LLM-powered agents

Disclaimer: This post aims to walk you through LLM-powered autonomous agent systems as if you were back in high school. If anything here isn’t clear, it’s probably my fault, so leave me a message via email. If this seems too easy, I recommend checking out Lilian Weng’s blog post.

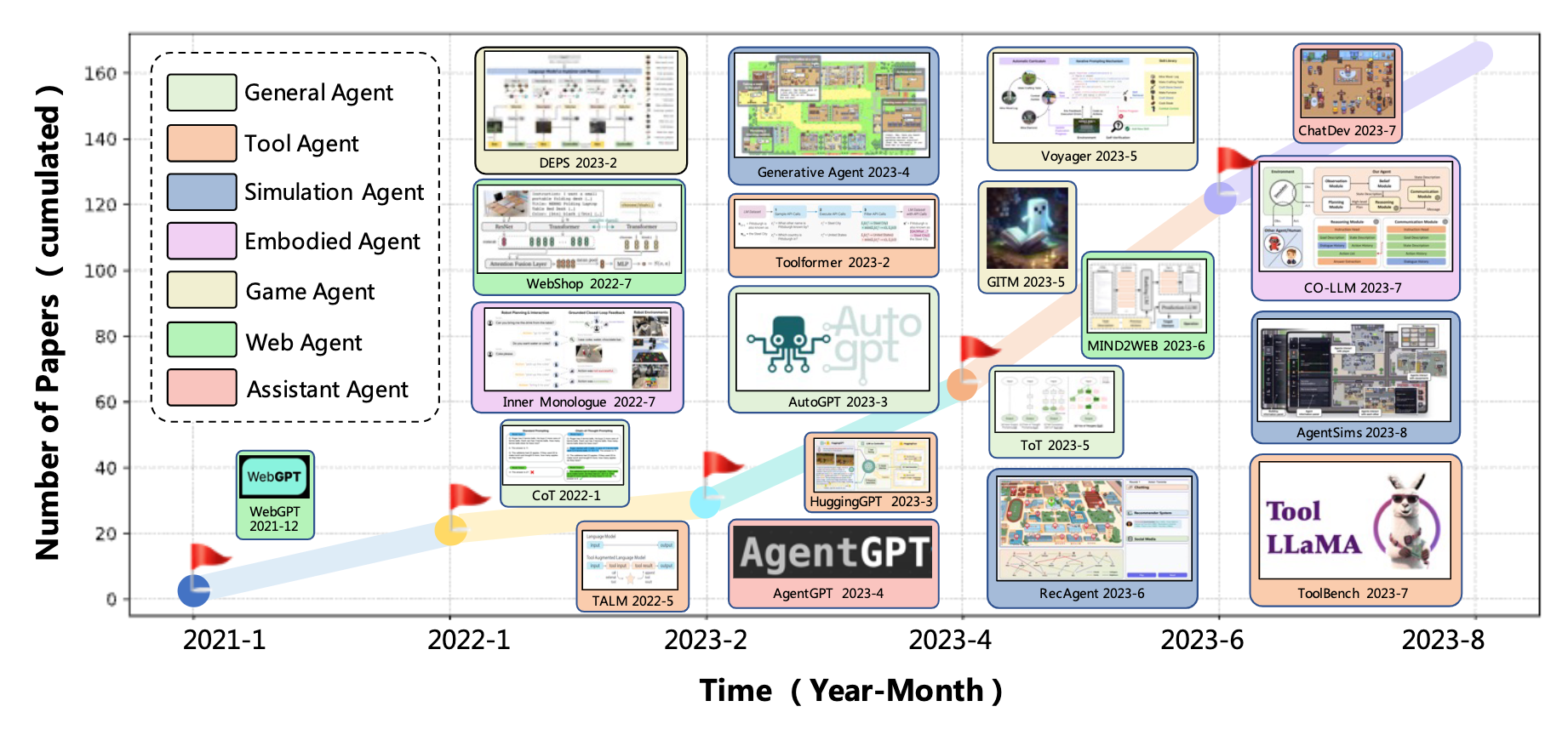

It’s been almost a year since ChatGPT was introduced to the world. It has dominated the consumer LLM market ever since, even though many other products have appeared. (Even the combined usage of the second- and third-place products comes nowhere near ChatGPT’s alone.) But we’re human, and the more we get used to something, the more we demand. Even though ChatGPT may be at its peak, people are starting to expect more from it. Executing multiple tasks with a single LLM raises many problems, which we touched on briefly in our previous post. This is where LLM-powered agents come in. Interest in the concept has grown steadily ever since pioneering projects like AutoGPT and BabyAGI captured the attention of tech enthusiasts[1][2]. But what exactly is an agent?

What is an LLM-powered agent (system)?

LLM-powered (or LLM-based) agents are, in essence, systems that mimic humans by leveraging the power of LLMs[3]. The ability to reason through complex real-world problems is no longer unique to us: ongoing studies suggest that LLMs share some human-like traits. For example, a recent paper from Anthropic suggests that LLMs behave sycophantically, in part because human feedback is involved in their training through a process known as reinforcement learning from human feedback (RLHF)[4].

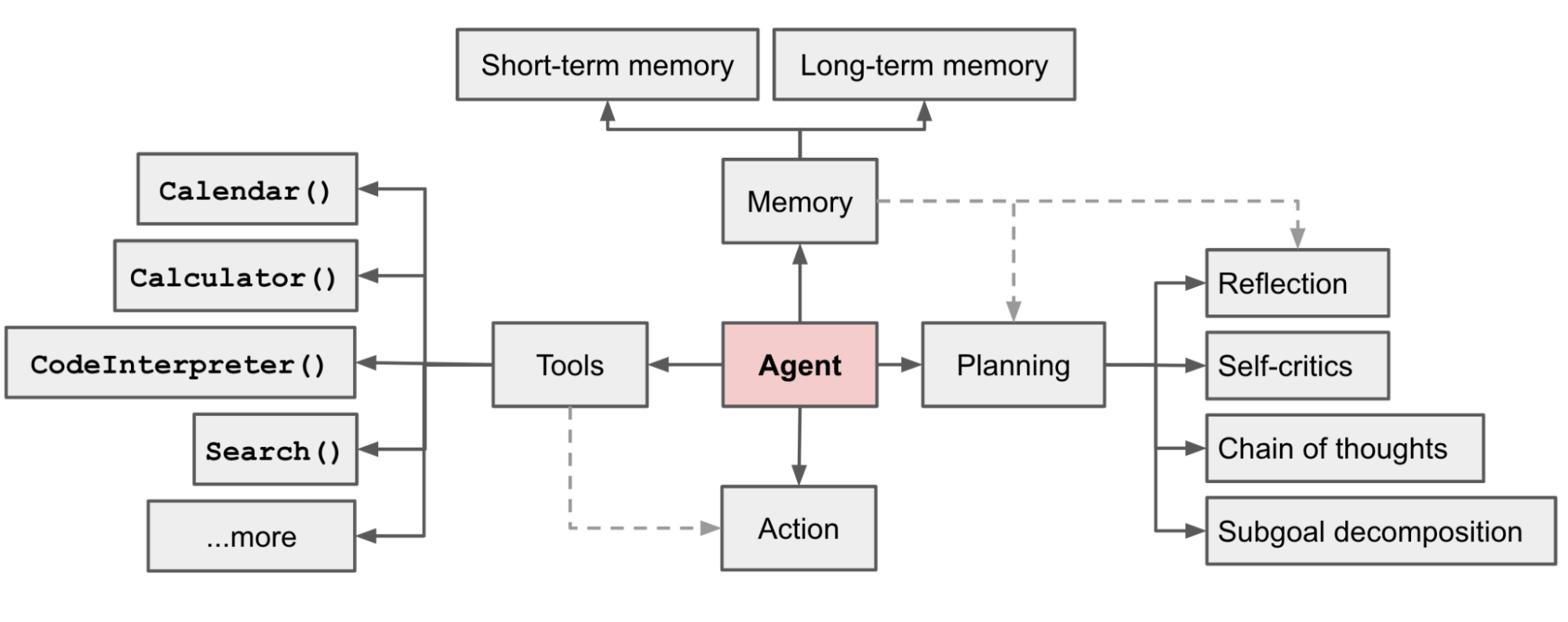

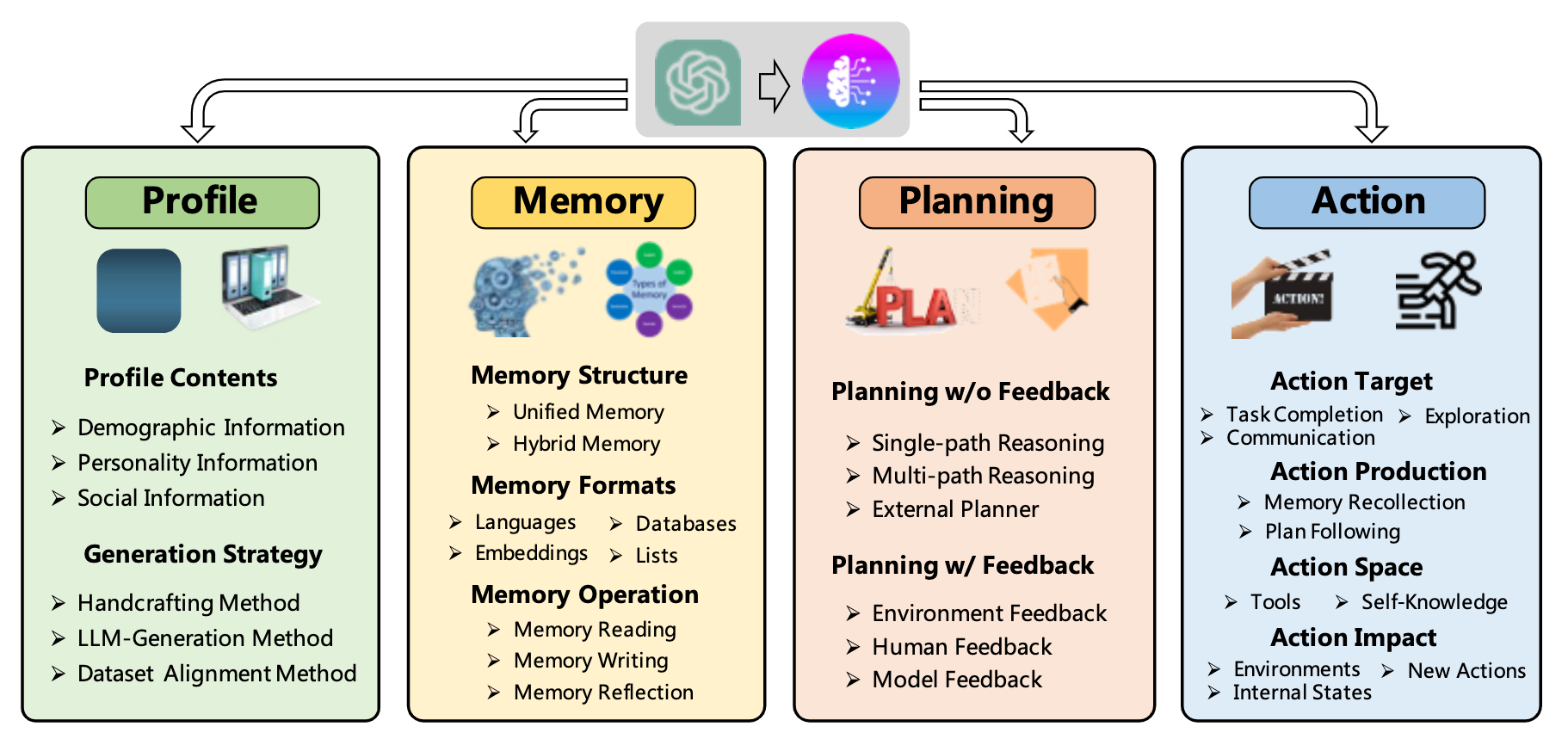

The study of autonomous agents is still in its early stages, and new discussions about it come up every day. There are different ways to decompose an autonomous agent. Lilian Weng proposes a planning-memory-tool framework that divides the agent by function[3]. In this view, the LLM acts as the brain, a vector store serves as long-term memory, and tools act as the body. Wang et al., on the other hand, propose a profile-memory-planning-action framework that focuses on how an agent is constructed[5].
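To make the planning-memory-tool split more concrete, here is a minimal Python sketch of how the three parts could fit together. It is a toy built on loud assumptions: `fake_llm` is a hypothetical stand-in for a real model call, `VectorMemory` ranks stored texts by cosine similarity over word counts rather than learned embeddings in a real vector database, and the two entries in `TOOLS` are made-up actions.

```python
import math
from collections import Counter

def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts. Real systems use learned embeddings."""
    return Counter(text.lower().split())

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

class VectorMemory:
    """Long-term memory: store texts, retrieve the ones most similar to a query."""
    def __init__(self) -> None:
        self.items: list[tuple[str, Counter]] = []

    def add(self, text: str) -> None:
        self.items.append((text, embed(text)))

    def search(self, query: str, k: int = 1) -> list[str]:
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]

# The "body": actions the agent can take (hypothetical examples).
TOOLS = {
    "word_count": lambda text: str(len(text.split())),
    "upper": lambda text: text.upper(),
}

def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for the "brain": decides which tool to use."""
    return "word_count" if "count" in prompt else "upper"

def run_agent(task: str, memory: VectorMemory) -> str:
    context = memory.search(task, k=1)            # memory: recall a related past item
    prompt = f"Context: {context}\nTask: {task}"  # planning: the brain sees task + memory
    result = TOOLS[fake_llm(prompt)](task)        # tools: the body acts
    memory.add(f"{task} -> {result}")             # remember the outcome for later steps
    return result

memory = VectorMemory()
print(run_agent("count the words in this sentence", memory))  # 6
```

Swapping `fake_llm` for a real model and `embed` for a real embedding model would not change the shape of the loop: recall, decide, act, remember.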

New decomposition method

At ASQ, we like to decompose an agent system from the user’s perspective, segmenting it into the phases below.

(1) Goal Specification

No matter how powerful language models become, ambiguous user inputs will still lead to generic or irrelevant answers. There are two approaches to aligning the model with the user's intention.

The first is instruction-tuning the model with RLHF, now the standard way to align a raw, pre-trained foundation model (FM) with human instructions. This is where FM providers will keep competing, and it matters to them because RLHF can beat sheer scale: in Ouyang et al., human evaluators preferred the outputs of the 1.3B-parameter InstructGPT model over those of the 175B-parameter GPT-3, despite it having over 100 times fewer parameters[6].
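The "human feedback" in RLHF enters through a reward model. In Ouyang et al.[6], labelers ranked several model outputs for the same prompt, and the reward model was trained so that the preferred output scores higher, by minimizing -log(sigmoid(r_chosen - r_rejected)) for each ranked pair. The sketch below computes only that loss for a single pair with made-up reward scores; the full pipeline then fine-tunes the language model against the learned reward with PPO, which is out of scope here.

```python
import math

def pairwise_reward_loss(r_chosen: float, r_rejected: float) -> float:
    """-log(sigmoid(r_chosen - r_rejected)) for one human comparison.

    Near 0 when the reward model already scores the human-preferred response
    higher; grows roughly linearly when it prefers the rejected one.
    """
    d = r_chosen - r_rejected
    # Numerically stable form of log(1 + exp(-d)).
    if d >= 0:
        return math.log1p(math.exp(-d))
    return -d + math.log1p(math.exp(d))

# Made-up reward scores for two responses to the same prompt.
print(round(pairwise_reward_loss(2.0, -1.0), 3))   # 0.049: ranking agrees with the human
print(round(pairwise_reward_loss(-1.0, 2.0), 3))   # 3.049: ranking disagrees
```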

The second is designing prompts that make the instruction-tuned model work as intended. This is the area users need to focus on. Good prompt design starts with thinking through what the task actually requires, but defining what must be done takes a lot of time and effort, even for skilled people. You need to experiment with prompts continually to check whether the results meet your standards; you can read more about how to do this on our blog. For agents, specifying the objective is especially important, since the agent's entire trajectory depends on it.
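One way to force yourself to think through the requirements is to write the goal down as structured fields before turning it into a prompt, and to keep a crude automatic check around while you iterate. The sketch below assumes nothing about any particular model API; `GoalSpec` and `passes` are hypothetical helpers, and a keyword-plus-length check is only a stand-in for a real evaluation.

```python
from dataclasses import dataclass, field

@dataclass
class GoalSpec:
    """Hypothetical structure for turning a vague request into an explicit goal."""
    objective: str
    constraints: list[str] = field(default_factory=list)
    success_criteria: list[str] = field(default_factory=list)

    def to_prompt(self) -> str:
        lines = [f"Objective: {self.objective}"]
        if self.constraints:
            lines.append("Constraints:")
            lines += [f"- {c}" for c in self.constraints]
        if self.success_criteria:
            lines.append("The answer is complete when:")
            lines += [f"- {s}" for s in self.success_criteria]
        return "\n".join(lines)

def passes(output: str, required_terms: list[str], max_words: int) -> bool:
    """Crude automatic check to run on each output while iterating on a prompt."""
    lowered = output.lower()
    has_terms = all(term.lower() in lowered for term in required_terms)
    return has_terms and len(output.split()) <= max_words

goal = GoalSpec(
    objective="Summarize the attached meeting notes",
    constraints=["At most 100 words", "Use bullet points"],
    success_criteria=["Every action item has an owner"],
)
print(goal.to_prompt())
```

Writing the success criteria explicitly also gives you something concrete to check each prompt variant against.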

(2) Task Management

Reasoning techniques for language models have been improving since day one. Researchers have published remarkable papers such as Chain-of-Thought (CoT)[8] and ReAct[7] that demonstrate these models' abilities. Unfortunately, and unsurprisingly, reasoning quality depends heavily on the underlying model's inference ability, and frankly, at the time of writing, no other model matches GPT-4. Again, this is the FM providers' territory.
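ReAct[7] interleaves reasoning and acting: the model writes a thought and an action, the system runs the action, and the resulting observation is fed back before the next step. Below is a minimal sketch of that loop. The `Thought:`/`Action:`/`Observation:` layout follows the style of the ReAct paper's prompts, but `scripted_llm`, `lookup`, and `FACTS` are hypothetical stand-ins; a real agent would call a model API and real tools.

```python
import re

# Hypothetical knowledge source the agent can query.
FACTS = {"capital of France": "Paris", "population of Paris": "about 2.1 million"}

def lookup(query: str) -> str:
    return FACTS.get(query, "No result.")

def scripted_llm(transcript: str) -> str:
    """Stand-in for a real model: returns the next thought and action."""
    if "Observation: Paris" not in transcript:
        return "Thought: I need the capital first.\nAction: Lookup[capital of France]"
    if "Observation: about" not in transcript:
        return "Thought: Now I need its population.\nAction: Lookup[population of Paris]"
    return "Thought: I have what I need.\nAction: Finish[about 2.1 million]"

ACTION = re.compile(r"Action: (\w+)\[(.*)\]")

def react(question: str, llm, max_steps: int = 5) -> str:
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        step = llm(transcript)               # reason: the model writes a thought + action
        transcript += step + "\n"
        match = ACTION.search(step)
        if match is None:
            continue                         # no action this step; ask the model again
        name, arg = match.groups()
        if name == "Finish":
            return arg                       # the model decided it is done
        observation = lookup(arg) if name == "Lookup" else f"Unknown action {name}."
        transcript += f"Observation: {observation}\n"  # act, then feed the result back
    return "No answer within step limit."

print(react("What is the population of the capital of France?", scripted_llm))
# about 2.1 million
```

The step limit matters in practice: a model that never emits `Finish` would otherwise loop forever.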

We'll go into more detail when we discuss multi-agent systems. In short, an agent system must select and coordinate the appropriate agents to serve the user. Each agent also needs to build its own task chain (also called an action plan) iteratively, reflecting on its previous thoughts and actions in order to fulfill the user's request.
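The two responsibilities described above, picking the right agent and growing a task chain from past results, can be sketched as a small coordinator loop, loosely in the spirit of BabyAGI's task queue[2]. Everything here is hypothetical: `Agent`, `select_agent`, `run_task_chain`, and the `follow_up` rule are illustrative names, and a real system would let an LLM choose the agent and propose the follow-up tasks.

```python
from collections import deque
from dataclasses import dataclass
from typing import Callable

@dataclass
class Agent:
    """Hypothetical specialist: a name, the skills it declares, and a handler."""
    name: str
    skills: set[str]
    handle: Callable[[str], str]

def select_agent(skill: str, agents: list[Agent]) -> Agent:
    """Coordination: route a task to the first agent that declares the needed skill."""
    for agent in agents:
        if skill in agent.skills:
            return agent
    raise LookupError(f"No agent has the skill {skill!r}")

def run_task_chain(plan, agents, revise, max_steps: int = 10) -> list[str]:
    """Execute (skill, task) pairs in order.

    After each step, `revise(task, result)` may return follow-up (skill, task)
    pairs, so the chain is extended based on what has happened so far.
    """
    queue = deque(plan)
    log: list[str] = []
    while queue and len(log) < max_steps:   # step cap guards against endless replanning
        skill, task = queue.popleft()
        agent = select_agent(skill, agents)
        result = agent.handle(task)
        log.append(f"{agent.name}: {result}")
        queue.extend(revise(task, result))
    return log

agents = [
    Agent("researcher", {"search"}, lambda t: f"found notes on {t}"),
    Agent("writer", {"write"}, lambda t: f"draft about {t}"),
]

def follow_up(task: str, result: str):
    # If research found something, schedule a writing step on the same topic.
    return [("write", task)] if result.startswith("found") else []

print(run_task_chain([("search", "LLM agents")], agents, follow_up))
# ['researcher: found notes on LLM agents', 'writer: draft about LLM agents']
```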

(3) Execution Performance

As stated above, what makes an agent great is the inference ability of its underlying model. It lets the agent choose sensible tools to work through the given task chain, and improve that chain by observing the results of its past actions.

Agents also need a sufficient set of tools to choose from, so that they can take the necessary actions, such as calling built-in modules, other neural models, or third-party APIs.
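A common way to give an agent a toolset is a registry that pairs each function with a description the model can read, plus a dispatcher that runs whichever tool the model names. In this sketch the JSON reply format (`{"tool": ..., "args": ...}`) and the `tool` decorator are assumptions for illustration, not any particular provider's function-calling API; the two tools stand in for built-in modules, other models, or third-party APIs.

```python
import json
from typing import Callable

TOOL_REGISTRY: dict[str, dict] = {}

def tool(description: str):
    """Decorator that registers a function together with a description for the model."""
    def register(fn: Callable) -> Callable:
        TOOL_REGISTRY[fn.__name__] = {"fn": fn, "description": description}
        return fn
    return register

@tool("Add two numbers.")
def add(a: float, b: float) -> float:
    return a + b

@tool("Convert text to upper case.")
def shout(text: str) -> str:
    return text.upper()

def tool_menu() -> str:
    """Text to include in the agent's prompt so the model knows what it can call."""
    return "\n".join(f"{name}: {spec['description']}" for name, spec in TOOL_REGISTRY.items())

def dispatch(model_output: str) -> str:
    """Run the tool named in a JSON reply such as {"tool": "add", "args": {"a": 1, "b": 2}}."""
    try:
        call = json.loads(model_output)
        spec = TOOL_REGISTRY[call["tool"]]
        return str(spec["fn"](**call.get("args", {})))
    except (json.JSONDecodeError, KeyError, TypeError) as err:
        # Report the failure back to the agent instead of crashing the loop.
        return f"Tool error: {err!r}"

print(tool_menu())
print(dispatch('{"tool": "add", "args": {"a": 2, "b": 3}}'))  # 5
```

Returning errors as text, rather than raising, lets the agent observe a bad tool call and try again on the next step.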

(4) Revision via Feedback

Currently, most agent systems do not support revising a request once it has been fulfilled. It is also crucial that users can communicate with the system afterwards to help re-align it with their intention.
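Supporting revision mostly means not throwing a fulfilled request away. Here is a minimal sketch, assuming a hypothetical `Session` class and a toy `fake_model` in place of a real model call: each fulfilled request is kept together with its result, and user feedback is appended and replayed so the next attempt sees both what was produced and what the user actually wanted.

```python
from dataclasses import dataclass, field
from typing import Callable

@dataclass
class Session:
    """Hypothetical session that keeps fulfilled requests so users can revise them."""
    generate: Callable[[str], str]          # stand-in for a model call: prompt -> text
    history: list[dict] = field(default_factory=list)

    def fulfill(self, request: str) -> str:
        result = self.generate(f"Request: {request}")
        self.history.append({"request": request, "result": result, "feedback": []})
        return result

    def revise(self, index: int, feedback: str) -> str:
        """Re-run an earlier request with its previous result and all feedback so far."""
        entry = self.history[index]
        entry["feedback"].append(feedback)
        prompt = f"Request: {entry['request']}\nPrevious result: {entry['result']}\n"
        prompt += "".join(f"Feedback: {note}\n" for note in entry["feedback"])
        entry["result"] = self.generate(prompt)
        return entry["result"]

def fake_model(prompt: str) -> str:
    # Toy model: obeys "shorter" feedback, otherwise produces a long answer.
    return "short summary" if "Feedback: make it shorter" in prompt else "long summary"

session = Session(fake_model)
print(session.fulfill("Summarize the quarterly report"))  # long summary
print(session.revise(0, "make it shorter"))                # short summary
```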

(5) Interface

This is where most projects fall short, since most of them are little more than command-line interfaces (CLIs) running in a terminal. The best I've seen so far is Perplexity with its generative UI, which other AI companies, ourselves included, should pay close attention to.

What's needed is a hybrid interface that combines conversational and visual elements. The two need to stay synchronized with each other so that information reaches the user intuitively.
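One simple way to keep the conversational and visual views in sync is to have both render from a single shared state, in the style of the observer pattern. The sketch below is an assumption about how this could be structured, not a description of how Perplexity or any other product works; `SharedState`, `ChatView`, and `PanelView` are hypothetical names.

```python
from typing import Callable

class SharedState:
    """Single source of truth that both the chat view and the visual view read from."""
    def __init__(self) -> None:
        self.data: dict = {}
        self.subscribers: list[Callable[[dict], None]] = []

    def subscribe(self, render: Callable[[dict], None]) -> None:
        self.subscribers.append(render)
        render(self.data)                    # render the current state immediately

    def update(self, **changes) -> None:
        self.data.update(changes)
        for render in self.subscribers:      # every view re-renders from the same state
            render(self.data)

class ChatView:
    """Conversational side: posts a message whenever the agent's status changes."""
    def __init__(self) -> None:
        self.messages: list[str] = []

    def render(self, state: dict) -> None:
        status = state.get("status")
        if status and (not self.messages or self.messages[-1] != f"Agent: {status}"):
            self.messages.append(f"Agent: {status}")

class PanelView:
    """Visual side: always shows a snapshot of the full state."""
    def __init__(self) -> None:
        self.fields: dict = {}

    def render(self, state: dict) -> None:
        self.fields = dict(state)

store = SharedState()
chat, panel = ChatView(), PanelView()
store.subscribe(chat.render)
store.subscribe(panel.render)
store.update(status="Searching flights", results=3)
print(chat.messages)   # ['Agent: Searching flights']
print(panel.fields)    # {'status': 'Searching flights', 'results': 3}
```

Because neither view keeps its own copy of the truth, a change made through one side (say, a filter clicked in the panel) shows up in the other without extra bookkeeping.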

What are some examples?

(1) AutoGPT

Developed by Toran Bruce Richards, AutoGPT was first released on March 30, 2023. It demonstrated an LLM's ability to plan a task chain iteratively, reflecting at each step on its past thoughts and actions as well as on human feedback. However, it shows clear limitations on more complex tasks. You can read more about AutoGPT on our blog, too.

(2) AgentGPT

Developed by the Reworkd team, AgentGPT was released on April 9, 2023. The team built a slick interface that many developers liked. The free plan limits the number of cycles the agent can run. The company is also backed by Y Combinator (YC).

(3) SuperAGI

Released on May 15, 2023, SuperAGI aims to empower businesses through its open-source ecosystem.

Conclusion

Agents are indeed exciting. They give us a peek at what the future might look like; who knows whether they are the key to AGI. However, all of the projects above use a single agent to resolve the user's problem. They can handle tasks whose action plans are relatively easy to structure, but the limitations LLMs have today carry over directly into these projects, which clearly limits their use cases.

So the ASQ team is tackling these issues one by one, using multiple agents that collaborate with each other. We've laid out our view of the problems agent systems currently face, and we would love to hear other perspectives. We'll be back with more on agents. Until then, stay safe.

References

- [1] Significant-Gravitas. "AutoGPT: An experimental open-source attempt to make GPT-4 fully autonomous." GitHub repository (github.com/Significant-Gravitas/AutoGPT).

- [2] Nakajima, Yohei. "babyagi." GitHub repository (github.com/yoheinakajima/babyagi).

- [3] Weng, Lilian. "LLM-powered Autonomous Agents." Lil’Log (Jun 2023). https://lilianweng.github.io/posts/2023-06-23-agent/.

- [4] Sharma, Mrinank, et al. "Towards Understanding Sycophancy in Language Models." arXiv preprint arXiv:2310.13548 (2023).

- [5] Wang, Lei, et al. "A Survey on Large Language Model Based Autonomous Agents." arXiv preprint arXiv:2308.11432 (2023).

- [6] Ouyang, Long, et al. "Training Language Models to Follow Instructions with Human Feedback." Advances in Neural Information Processing Systems 35 (2022): 27730-27744.

- [7] Yao, Shunyu, et al. "ReAct: Synergizing Reasoning and Acting in Language Models." arXiv preprint arXiv:2210.03629 (2022).

- [8] Wei, Jason, et al. "Chain-of-Thought Prompting Elicits Reasoning in Large Language Models." Advances in Neural Information Processing Systems 35 (2022): 24824-24837.